How technology companies grapple with complex issues is being heavily scrutinized, often without important context. There is a lot more to the story. What is getting lost in this discussion is some of the important progress we’ve made as a company and the positive impact it is having across many key areas.

We firmly believe that ongoing research and candid conversations about our impact are some of the most effective ways to identify emerging issues and get ahead of them. This doesn’t mean we find and fix every problem right away. But because of this approach, together with other changes, we have made significant progress across a number of important areas, including privacy, safety and security. Just as the world has changed a lot, so has Facebook.

In the past, we didn’t address safety and security challenges early enough in the product development process. Instead, we made improvements reactively in response to a specific abuse. But we have fundamentally changed that approach. Today, we embed teams focusing specifically on safety and security issues directly into product development teams, allowing us to address these issues during our product development process, not after it. Products also have to go through an Integrity Review process, similar to the Privacy Review process, so we can anticipate potential abuses and build in ways to mitigate them. Here are a few examples of how far we’ve come.

Safety and Security

Some of the most important changes we’ve made in recent years have been in prioritizing safety and security. As a result:

- Today we have 40,000 people working on safety and security, and have invested more than $13 billion in teams and technology in this area since 2016.

- Since 2017, Facebook’s security teams have disrupted and removed more than 150 covert influence operations, both foreign and domestic, helping prevent similar abuse.

- Our advanced AI has helped us block 3 billion fake accounts in the first half of this year.

- Our AI systems have gotten better at keeping people safer on our platform, for example by proactively removing content that violates our standards on hate speech. We now remove 15 times more of this content across Facebook and Instagram than when we first began reporting it in 2017.

- Since 2019, we’ve used technology that understands the same concept in multiple languages and applies what it learns in one language to improve its performance in others.

We have also changed our approach to protecting people’s privacy as a company. This includes investing in and expanding our Privacy Checkup, which today is used by tens of millions of people every month to manage their settings and control their experience on Facebook, and launching tools like Off-Facebook Activity and Why Am I Seeing This? that show people how their information is used and let them more easily manage settings.

Combating Misinformation

Misinformation has been a challenge on and off the internet for many decades, and people are understandably concerned about how it will be handled in future internet technologies. At Facebook, we’ve begun addressing it comprehensively rather than treating it as a single problem with a single solution, and this approach has helped us get better at tackling this complex challenge. We’ve worked to develop and expand our systems to reduce misinformation and promote reliable information. As a result:

- We remove false and harmful content that violates our Community Standards, including more than 20 million pieces of false COVID-19 and vaccine content.

- We’ve built a global network of more than 80 independent fact-checking partners who rate the accuracy of posts in more than 60 languages across our apps.

- We’ve displayed warnings on more than 190 million pieces of COVID-related content on Facebook that our fact-checking partners rated as false, partly false, altered or missing context.

- We’ve helped over 2 billion people find credible COVID-19 information through our COVID-19 Information Center and News Feed pop-ups — and more than 140 million people visited our US 2020 Voting Information Center.

Innovating More Responsibly

Most importantly, we’ve changed not just what we build but how we build it, so that when we launch new products, they are more likely to have effective privacy, security and safety protections already built in. For example:

- This year when we rolled out Live Audio Rooms, we built them with integrated safety and integrity measures:

  - We prohibit people who have reached a certain threshold of Community Standards violations from creating or speaking in Live Audio Rooms.

  - We give room hosts the ability to demote speakers to listeners or block people from joining their room entirely.

  - We give speakers and listeners the ability to report the host.

- We also launched Facebook Horizon in beta last year with a number of new features that put people in control of their experience in VR.

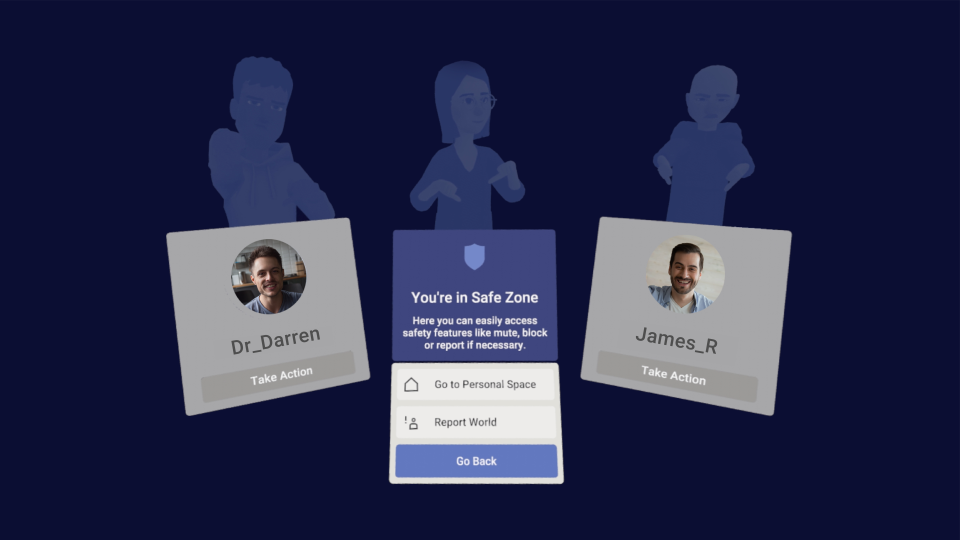

- We introduced Safe Zone, which lets people take a break from their surroundings and then block, mute or report.

- And we introduced a new feature in Horizon that makes it easier to submit reports, since we know it’s difficult to record a painful incident while it’s happening.

You can read more about the direction of our responsible innovation efforts in a post from Margaret Stewart, VP of Product Design & Responsible Innovation at Facebook.

Yes, we’ve made progress. But we also know that there will always be examples of things we miss and things we take down by mistake. There is no perfect here. Collaborating with experts, policymakers and others has made us better, and continued collaboration will be key to making sure our progress continues. And that’s our plan.

Read more about our efforts on our new page, which features updated information and figures, to give a sense of where things have improved and where we still have more work to do. Our Transparency Center is also a comprehensive destination for our integrity and transparency efforts. Also, see a timeline of our integrity efforts since 2016.

For more, visit about.facebook.com/progress.