Note: For future Facebook updates about Oversight Board cases, please visit the Transparency Center.

Today, the Oversight Board published its decisions on the first set of cases it chose to review. We will implement these binding decisions in accordance with the bylaws and have already restored the content in three of the cases, as mandated by the Oversight Board. We restored the breast cancer awareness post last year, as it did not violate our policies and was removed in error.

Given that we are in the midst of a global pandemic, we feel it’s important to briefly comment on the decision in the COVID-19 case. The board rightfully raises concerns that we could be more transparent about our COVID-19 misinformation policies. We agree that these policies could be clearer, and we intend to publish updated COVID-19 misinformation policies soon. We do believe, however, that it is critical for everyone to have access to accurate information, and our current approach to removing misinformation is based on extensive consultation with leading scientists, including experts from the CDC and WHO. During a global pandemic, this approach will not change.

Included with the board’s decisions are numerous policy advisory statements. Under the bylaws, we have up to 30 days to fully consider and respond to these recommendations. We believe the board included some important suggestions that we will take to heart, and its recommendations will have a lasting impact on how we structure our policies.

We look forward to continuing to receive the board’s decisions in the years to come. For more information about the next steps in the process now that we have received these first decisions from the Oversight Board, please see the FAQ below.

Questions & Answers

Now that we have the Oversight Board’s decisions, what are the next steps for Facebook?

Because we received the board’s decisions only a short time ago, we will need time to understand their full impact. Within 30 days, we will update the Newsroom post about each case to explain how we have considered the policy recommendations, including whether we will put them through our policy development process.

Some of today’s recommendations include suggestions for major operational and product changes to our content moderation, for example, allowing users to appeal content decisions made by AI to a human reviewer. We expect it to take longer than 30 days to fully analyze and scope these recommendations.

Are the Oversight Board’s decisions binding?

Yes. Today’s decisions (and future board decisions) are binding on Facebook, and we will restore or remove content based on the board’s determination. The board’s policy recommendations are advisory, and we will look to them for guidance in modifying and developing our policies.

What will you do about content that is the same as or similar to content the board ruled on?

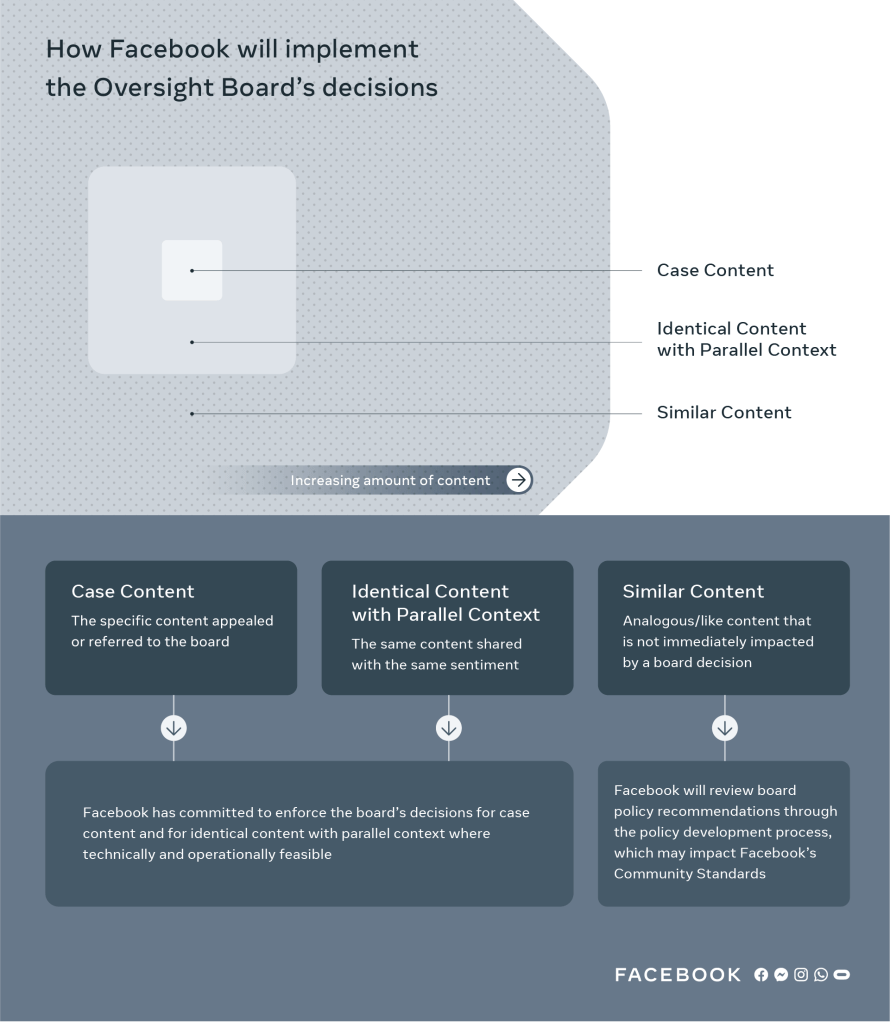

When it is feasible to do so, we will implement the board’s decision across content that is identical and shared with similar sentiment and context. See below for more detail.

How many pieces of content are you taking action on as a result of the board’s decisions today?

We cannot provide a number right now since we are still reviewing the decisions. We’ve taken action on the individual pieces of content the board has decided on. Our teams are also reviewing the board’s decisions to determine where else they should apply to content that’s identical or similar.

Sharing More Details on How We Will Implement the Oversight Board’s Decisions

How will a decision by the Oversight Board be implemented?

There are several phases to fully implementing a decision by the Oversight Board. The board’s decision will affect content on the platform in two ways: through the binding aspects of the decision itself, and through any additional guidance or recommendations the board includes.

Case Content: As illustrated in the graphic above, we begin implementing the decision by taking action on the case content. Per the bylaws, we will do so within seven days of the board’s decision.

Identical Content with Parallel Context: Facebook will implement the Oversight Board’s decision across identical content with parallel context, where such content exists. First, Facebook will use the board’s decision to define parallel context. For example, if we see another post using the same image that the board decided should be removed, we may also remove that post if it is shared with the same sentiment. If the post has the same image but a different context (for example, the post condemns the image rather than supports it), this would not be considered parallel context, and we would leave it on the platform.

To take action on identical content with parallel context, Facebook’s policy team will first analyze the board’s decision to determine what constitutes identical content in each case. It will then determine the contexts in which the board’s decision should also apply to that identical content. One key element of this analysis is reassessing the case content’s scope and severity based on the board’s decision.

Next, Facebook’s operations team, which enforces our policies, will investigate how the decision can be scaled. There are some limitations to removing seemingly identical content, including cases where the content is similar, but not similar enough for our systems to consistently identify it. The operations team will ensure that new posts using the content are either allowed to remain on the platform or taken down, depending on the board’s decision.

After this step is completed, we will update the case-specific Newsroom post with our follow-up actions on this content.

Similar Content: Similar content means content that is not directly affected by the Oversight Board’s decision (the content is not identical, or the context is not parallel) but that raises the same questions about Facebook’s policies. If the Oversight Board issues a policy advisory statement with its decision, or makes a decision in response to a Facebook request for a policy advisory opinion, Facebook will review the recommendations or advisory statements and publicly respond within 30 days to explain how it will approach them. First, Facebook’s policy team will review the board’s recommendation and decide whether it should go to the Policy Forum for further review and potential changes to Facebook’s Community Standards or Instagram’s Community Guidelines.

After these steps are completed, Facebook will update the case-specific Newsroom post and, in instances where the recommendation is considered at the Policy Forum, document the process in the Policy Forum minutes. Facebook has committed to considering the board’s recommendations through its policy development process. That process is a large part of how we expect the board’s decisions to have a lasting influence on our policies, procedures and practices.