By Vanessa Callison-Burch, Product Manager, Jennifer Guadagno, Researcher, and Antigone Davis, Head of Global Safety

There is one death by suicide in the world every 40 seconds, and suicide is the second leading cause of death for 15- to 29-year-olds. Experts say that one of the best ways to prevent suicide is for those in distress to hear from people who care about them.

Through friendships on the site, Facebook is in a unique position to help connect a person in distress with people who can support them. It’s part of our ongoing effort to help build a safe community on and off Facebook.

Today we’re updating the tools and resources we offer to people who may be thinking of suicide, as well as the support we offer to their concerned friends and family members:

- Integrated suicide prevention tools to help people in real time on Facebook Live

- Live chat support from crisis support organizations through Messenger

- Streamlined reporting for suicide, assisted by artificial intelligence

Already on Facebook, if someone posts something that makes you concerned about their well-being, you can reach out to them directly or report the post to us. We have teams working around the world, 24/7, who review incoming reports and prioritize the most serious ones, such as those involving suicide. We offer people who have expressed suicidal thoughts a number of support options. For example, we prompt them to reach out to a friend and even offer pre-populated text to make it easier to start a conversation. We also suggest contacting a help line and offer other tips and resources people can use to help themselves in that moment.

Suicide prevention tools have been available on Facebook for more than 10 years and were developed in collaboration with mental health organizations such as Save.org, National Suicide Prevention Lifeline, Forefront and Crisis Text Line, and with input from people who have personal experience thinking about or attempting suicide. In 2016, with the help of over 70 partners around the world, we expanded the availability of the latest tools globally and improved how they work based on new technology and feedback from the community.

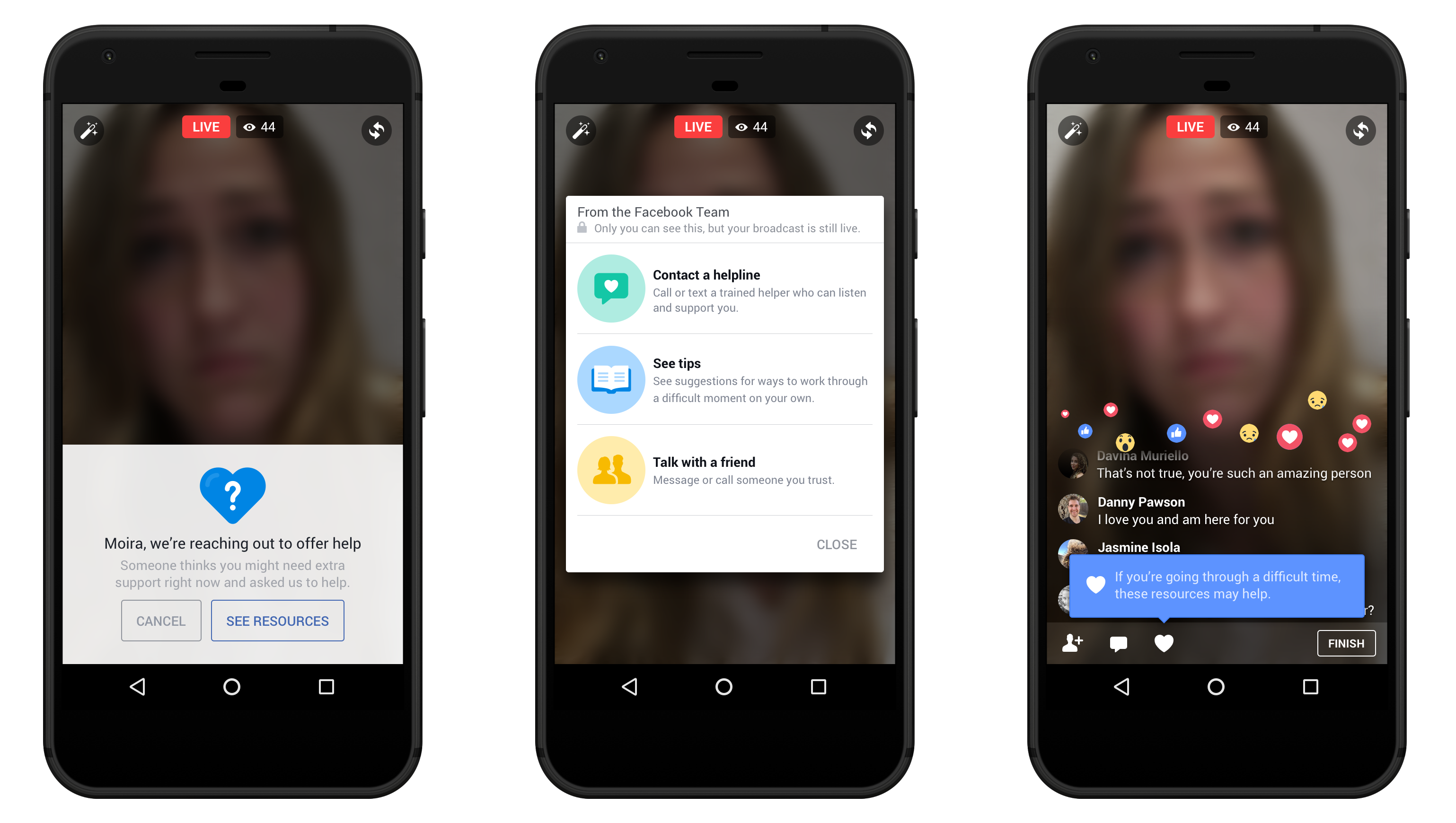

Supporting Someone on Facebook Live

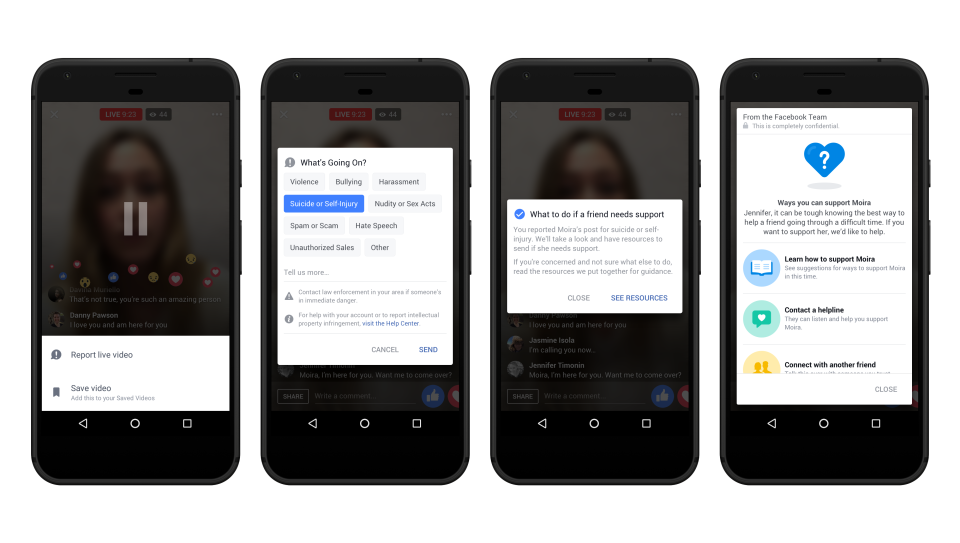

- Our suicide prevention tools for Facebook posts will now be integrated into Facebook Live. People watching a live video have the option to reach out to the person directly and to report the video to us. We will also provide resources to the person reporting the live video to assist them in helping their friend.

- The person sharing a live video will see a set of resources on their screen. They can choose to reach out to a friend, contact a help line or see tips.

If you or someone you know is in crisis, it is important to call local emergency services right away. You can also visit our Help Center for information about how to support yourself or a friend.

Empowering Crisis Support Partners

Partners are key to our work in suicide prevention and mental health support.

- We recently added the ability for people to connect with our crisis support partners over Messenger. Now people will see the option to message with someone in real time directly from the organization’s Page or through our suicide prevention tools.

- Participating organizations include Crisis Text Line, the National Eating Disorders Association and the National Suicide Prevention Lifeline.

- This test will expand over the next several months at a pace that lets the participating organizations handle the additional volume of messages. Zendesk donated some of its backend tools to make this integration possible.

- Today, we are also launching a video campaign with partner organizations across the globe to raise awareness about ways to help a friend in need.

Making Reporting Easier

We work to address posts expressing thoughts of suicide as quickly and accurately as possible.

- Based on feedback from experts, we are testing a streamlined reporting process that uses pattern recognition based on posts previously reported for suicide. This artificial intelligence approach makes the option to report a post about “suicide or self injury” more prominent on posts that resemble those earlier reports.

- We’re also testing pattern recognition to identify posts that are very likely to include thoughts of suicide. Our Community Operations team will review these posts and, if appropriate, provide resources to the person who posted the content, even if no one on Facebook has reported it yet.

- We are starting this limited test in the US and will continue working closely with suicide prevention experts to understand other ways we can use technology to help provide support.

Suicide prevention is one way we’re working to build a safer community on Facebook. With the help of our partners and people’s friends and family members on Facebook, we’re hopeful we can support more people over time.