Our Open Source Approach

Open source AI has the potential to unlock unprecedented technological progress. It levels the playing field, giving people free access to powerful and often expensive technology. That access enables competition and innovation, which in turn produce tools that benefit individuals, society and the economy. Open sourcing AI is not optional; it is essential for cementing America’s position as a leader in technological innovation, economic growth and national security. In this fiercely competitive global landscape, the race to develop robust AI ecosystems is intensifying, driving rapid innovation and superior solutions. By championing a balanced approach to risk assessment that encourages innovation, the U.S. can ensure that its AI development remains competitive, thereby securing the transformative benefits of AI for the future.

The Framework

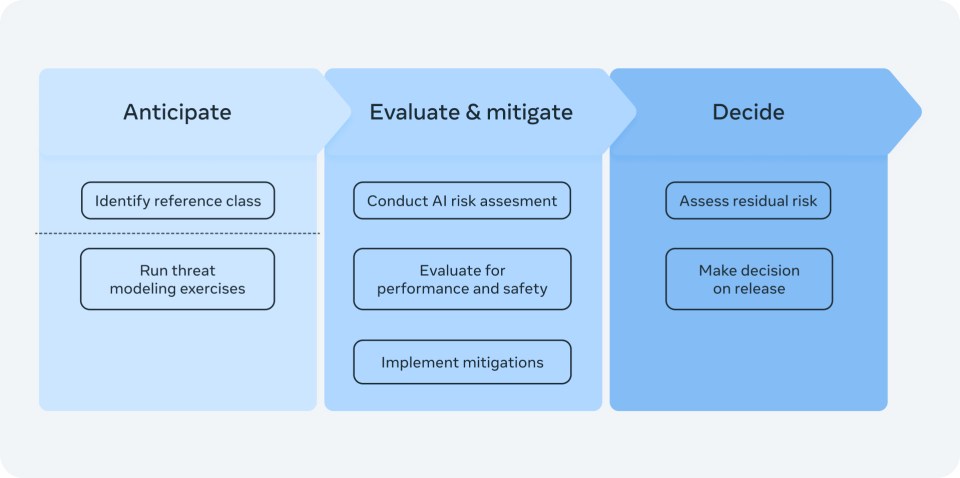

Our Frontier AI Framework focuses on the most critical risks: cybersecurity threats and risks from chemical and biological weapons. By prioritizing these areas, we can work to protect national security while promoting innovation. Our framework outlines a number of processes we follow to anticipate and mitigate risk when developing frontier AI systems. For example:

- Identifying catastrophic outcomes to prevent: Our framework identifies potential catastrophic outcomes related to cyber, chemical and biological risks that we strive to prevent. It focuses on evaluating whether these catastrophic outcomes are enabled by technological advances and, if so, identifying ways to mitigate those risks.

- Threat modeling exercises: We conduct threat modeling exercises to anticipate how different actors might seek to misuse frontier AI to produce those catastrophic outcomes, working with external experts as necessary. These exercises are fundamental to our outcomes-led approach.

- Establishing risk thresholds: We define risk thresholds based on the extent to which our models facilitate the threat scenarios. We have processes in place to keep risks within acceptable levels, including applying mitigations.

Our open source approach also helps us to better anticipate and mitigate risk because it enables us to learn from the broader community’s independent assessments of our models’ capabilities. This process improves the efficacy and trustworthiness of our models and contributes to better risk evaluation in the field. In addition to evaluating catastrophic risk, our framework takes into account the benefits of the technology we’re developing.

Looking Ahead

While the focus of this framework is to help mitigate severe risks, it’s important to remember that the primary reason we’re developing these technologies is that they have the potential to greatly benefit society.

We hope that by sharing our current approach to responsible development of advanced AI systems, we can provide insight into our decision-making processes and advance discussions and research about how to improve AI evaluation, with regard to both risks and benefits. As AI technology progresses, we will continue to evolve and refine our approach.