Imagine a professional musician being able to explore new compositions without having to play a single note on an instrument. Or a small business owner adding a soundtrack to their latest video ad on Instagram with ease. That’s the promise of AudioCraft — our latest AI tool that generates high-quality, realistic audio and music from text.

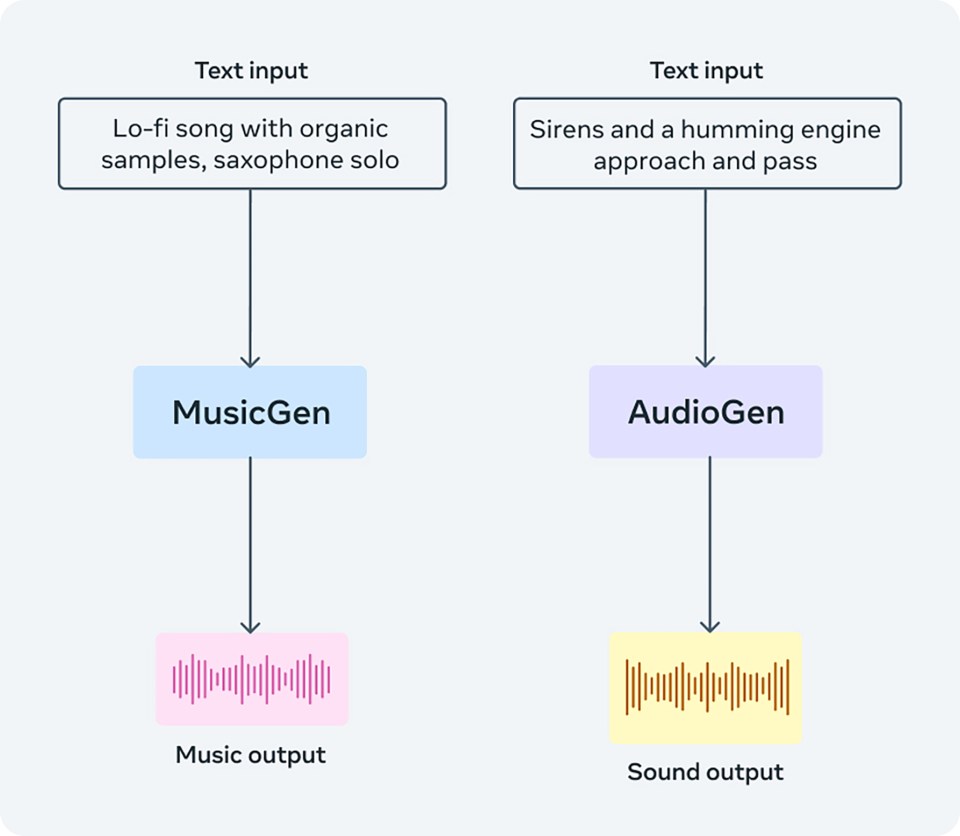

AudioCraft consists of three models: MusicGen, AudioGen and EnCodec. MusicGen, which was trained with Meta-owned and specifically licensed music, generates music from text prompts, while AudioGen, which was trained on public sound effects, generates audio from text prompts. Today, we’re excited to release an improved version of our EnCodec decoder, which allows higher quality music generation with fewer artifacts. We’re also releasing our pre-trained AudioGen models, which let you generate environmental sounds and sound effects like a dog barking, cars honking, or footsteps on a wooden floor. And lastly, we’re sharing all of the AudioCraft model weights and code.

We’re open-sourcing these models, giving researchers and practitioners access so they can train their own models with their own datasets for the first time, and help advance the field of AI-generated audio and music.

While we’ve seen a lot of excitement around generative AI for images, video, and text, audio has seemed to lag a bit behind. There’s some work out there, but it’s highly complicated and not very open, so people aren’t able to readily play with it. Generating high-fidelity audio of any kind requires modeling complex signals and patterns at varying scales. Music is arguably the most challenging type of audio to generate as it’s composed of local and long-range patterns, from a suite of notes to a global musical structure with multiple instruments.

The AudioCraft family of models are capable of producing high-quality audio with long-term consistency, and they’re easy to use. With AudioCraft, we simplify the overall design of generative models for audio compared to prior work in the field — giving people the full recipe to play with the existing models that Meta has been developing over the past several years while also empowering them to push the limits and develop their own models.

AudioCraft works for music, sound, compression, and generation — all in the same place. Because it’s easy to build on and reuse, people who want to build better sound generators, compression algorithms, or music generators can do it all in the same code base and build on top of what others have done.

Having a solid open source foundation will foster innovation and complement the way we produce and listen to audio and music in the future. With even more controls, we think MusicGen can turn into a new type of instrument — just like synthesizers when they first appeared.

We see the AudioCraft family of models as tools for musicians and sound designers to provide inspiration, help people quickly brainstorm and iterate on their compositions in new ways. We can’t wait to see what people create with Audiocraft.

Learn more about AudioCraft on our AI blog.