Today, we’re publishing our quarterly reports for the third quarter of 2022 and our biannual Transparency Reports for the first half of 2022. They include:

- The Community Standards Enforcement Report

- The Adversarial Threat Report

- The Widely Viewed Content Report

- The Oversight Board Quarterly Update

- Biannual Transparency Report including:

These reports are all available in the Transparency Center. Here are the key highlights from each one:

Community Standards Enforcement Report Highlights

While our integrity efforts are always evolving, our goal is to reduce the violating content people see while making fewer mistakes. This means ensuring we take action on content that violates our policies while being more accurate and detecting nuance so that we aren’t taking down things incorrectly, like jokes between friends.

Our actions against hate speech-related content decreased from 13.5 million to 10.6 million in Q3 2022 on Facebook because we improved the accuracy of our AI technology. We’ve done this by leveraging data from past user appeals to identify posts that could have been removed by mistake without appropriate cultural context. For example, now we can better recognize humorous terms of endearment used between friends, or better detect words that may be considered offensive or inappropriate in one context but not another. As we improved this accuracy, our proactive detection rate for hate speech also decreased from 95.6% to 90.2% in Q3 2022.

Similarly, our actions against content that incites violence decreased from 19.3 million to 14.4 million in Q3 2022 after our improved AI technology was able to better recognize language and emojis used in jest between friends. As we improved our accuracy on this front, our proactive rate for actioning this content decreased from 98.2% to 94.3% in Q3 2022.

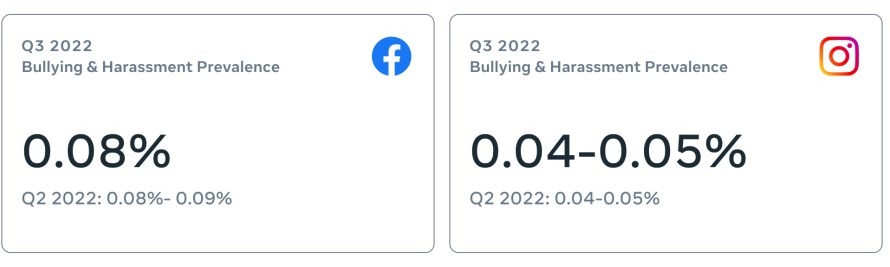

For bullying and harassment-related content, our proactive rate decreased in Q3 2022 from 76.7% to 67.8% on Facebook, and 87.4% to 84.3% on Instagram. This decrease was due to improved accuracy in our technologies (and a bug in our system that is now resolved).

Although our proactive rate dropped in a few areas, prevalence of harmful content on Facebook and Instagram remained relatively consistent between Q2 and Q3. We anticipate continued movement in these areas as our AI improves.

On Facebook in Q3, we took action on:

- 16.7 million pieces of content related to terrorism, an increase from 13.5 million in Q2. This increase was because non-violating videos were added incorrectly to our media-matching technology banks and were removed (though they were eventually restored).

- 4.1 million pieces of drug content, an increase from 3.9 million in Q2 2022, due to improvements made to our proactive detection technology.

- 1.4 billion pieces of spam content, an increase from 734 million in Q2, due to an increased number of adversarial spam incidents in August.

On Instagram in Q3, we took action on:

- 2.2 million pieces of content related to terrorism, an increase from 1.9 million in Q2, because non-violating videos were added incorrectly to our media-matching technology banks and were removed (though they were eventually restored).

- 2.5 million pieces of drug content, an increase from 1.9 million, due to improvements in our proactive detection technology.
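To put the quarter-over-quarter figures in the two lists above on a common scale, the following minimal sketch computes each change as a percentage of the Q2 value. The dictionary labels and the `relative_change` and `summarize` helpers are illustrative names chosen for this example, not official metric names or tooling, and the inputs are the rounded figures quoted above (for example, 1.4 billion is entered as 1,400 million), so the results are approximate.

```python
# Figures quoted in the lists above, in millions of pieces of content,
# stored as (Q2 2022, Q3 2022). Keys are labels invented for this sketch.
ACTIONED = {
    ("Facebook", "terrorism"): (13.5, 16.7),
    ("Facebook", "drugs"): (3.9, 4.1),
    ("Facebook", "spam"): (734.0, 1400.0),  # 1.4 billion, as rounded in the text
    ("Instagram", "terrorism"): (1.9, 2.2),
    ("Instagram", "drugs"): (1.9, 2.5),
}


def relative_change(previous: float, current: float) -> float:
    """Return the change from previous to current as a percentage of previous."""
    if previous == 0:
        raise ValueError("previous must be non-zero")
    return (current - previous) / previous * 100.0


def summarize(figures: dict) -> dict:
    """Map each (platform, category) key to its percentage change, rounded to 0.1."""
    return {
        key: round(relative_change(q2, q3), 1)
        for key, (q2, q3) in figures.items()
    }


if __name__ == "__main__":
    for (platform, category), pct in summarize(ACTIONED).items():
        print(f"{platform} {category}: {pct:+.1f}%")
```

Because the inputs are rounded, the percentages are best read as rough indicators of scale rather than exact values.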

Adversarial Threat Report

As part of our regular reporting, we’re sharing our third Adversarial Threat Report to provide a qualitative view into the adversarial threats we tackle globally. Last quarter, we investigated and took down three separate covert influence operations in the United States, China and Russia for violating our policy against coordinated inauthentic behavior. Two of these networks (China and Russia) were originally reported on September 27, 2022. Read the full report.

Widely Viewed Content Report Highlights

Insights from the Widely Viewed Content Report (WVCR) continue to inform how we evolve our products and policies. This includes developing new policies, where needed, to address harmful or otherwise low-quality content, and making changes to ranking that have reduced views of problematic content and prevented it from reaching a wide audience. This quarter’s top content did not contain any policy-violating content, and we’re cautiously optimistic about the progress we’ve made as we work to improve the quality of content within Facebook. We continue to work rigorously to understand the content ecosystem and evaluate the effectiveness of our policies and integrity measures – closing gaps as we find them. Read more about the report.

Working with External Experts

Our transparency reports allow the public to hold us accountable and help us improve how we talk about our work. We are also committed to undertaking and releasing independent, third-party assessments for our processes, policies and metrics.

Earlier this year, we released an EY assessment of our Community Standards Enforcement Report, which concluded that the calculation of the metrics in the report was fairly stated, and that our internal controls are suitably designed and operating effectively. We are also committed to independent oversight and support the Media Rating Council (MRC) as the auditor of our monetized solutions in advertising. Earlier this month, we announced that we received accreditation from the MRC for content-level Brand Safety on Facebook.

This quarter, we updated and expanded the section of the Transparency Center on how we engage with stakeholders, including new examples of how their feedback has contributed to the development of our content policies.

An Update on Governance

The Oversight Board continues to be a valuable source of external perspective and accountability for Meta. The Board’s recommendations help us with our approach to content moderation by driving thoughtful improvements to our policies, operations and products. We respond to every recommendation publicly and have committed to implement or explore the feasibility of implementing 75 percent of those recommendations to date.

We also recently announced a community forum taking place in December, which will bring together nearly 6,000 people from 32 countries to discuss conduct in the metaverse. Community forums bring together diverse groups of people to discuss tough issues, consider hard choices and share their perspectives on a set of recommendations. Initiatives like this are part of how we’re exploring new forms of governance, not only to help decentralize decision-making, but also to include diverse perspectives in the process.