- Harmful content can evolve quickly, so we built new AI technology that can adapt more easily and take action on new or evolving types of harmful content faster.

- This new AI system uses “few-shot learning,” which starts with a general understanding of a topic and then uses far fewer labeled examples to learn new tasks.

Harmful content continues to evolve rapidly, whether fueled by current events or by people looking for new ways to evade our systems, and it’s crucial for AI systems to evolve alongside it. But it typically takes several months to collect and label the thousands, if not millions, of examples needed to train each individual AI system to spot a new type of content.

To tackle this, we’ve built and recently deployed Few-Shot Learner (FSL), an AI technology that can adapt to take action on new or evolving types of harmful content within weeks instead of months. This new AI system uses a method called “few-shot learning,” in which models start with a general understanding of many different topics and then use far fewer (sometimes zero) labeled examples to learn new tasks. FSL can be used in more than 100 languages and learns from different kinds of data, such as images and text. This new technology will help augment our existing methods of addressing harmful content.

Our new system works across three different scenarios, each of which requires a different number of labeled examples:

- Zero-shot: Policy descriptions with no examples

- Few-shot with demonstration: Policy descriptions with a small set of examples (n < 50)

- Low-shot with fine-tuning: ML developers can fine-tune the FSL base model with a small number of training examples
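This post does not describe how FSL works internally, so the following is only a hypothetical, generic sketch of how the first two scenarios differ: in zero-shot mode a post is scored against the policy description alone, while in few-shot-with-demonstration mode a handful of labeled examples (fewer than 50) are folded into the same reference. The word-count "embedding", the cosine-similarity scoring, and the names `embed`, `build_prototype`, and `score` are all assumptions made for illustration; a production system would rely on a large pretrained multilingual model, and the fine-tuning scenario is not shown.

```python
import math
import re
from collections import Counter
from typing import List, Optional


def embed(text: str) -> Counter:
    """Hypothetical stand-in for a pretrained encoder: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def build_prototype(policy: str, examples: Optional[List[str]] = None) -> Counter:
    """Zero-shot: the reference is the policy description only.
    Few-shot with demonstration: also add a small set (n < 50) of labeled examples."""
    examples = examples or []
    if len(examples) >= 50:
        # Larger labeled sets fall under the fine-tuning scenario (not sketched here).
        raise ValueError("use fine-tuning for 50 or more labeled examples")
    prototype = embed(policy)
    for example in examples:
        prototype += embed(example)  # Counter addition sums the word counts
    return prototype


def score(post: str, prototype: Counter) -> float:
    """Higher scores mean the post looks more like the policy's target content."""
    return cosine(embed(post), prototype)
```

For example, a policy description such as "posts that discourage vaccination with misleading claims" shares no words with "Is the vaccine a DNA changer?", so the zero-shot score is 0.0; adding the single demonstration "Vaccine or DNA changer?" gives the reference overlapping terms and a positive score. This mirrors, in a very simplified way, why a few targeted examples can help catch phrasing that a policy description alone would miss.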

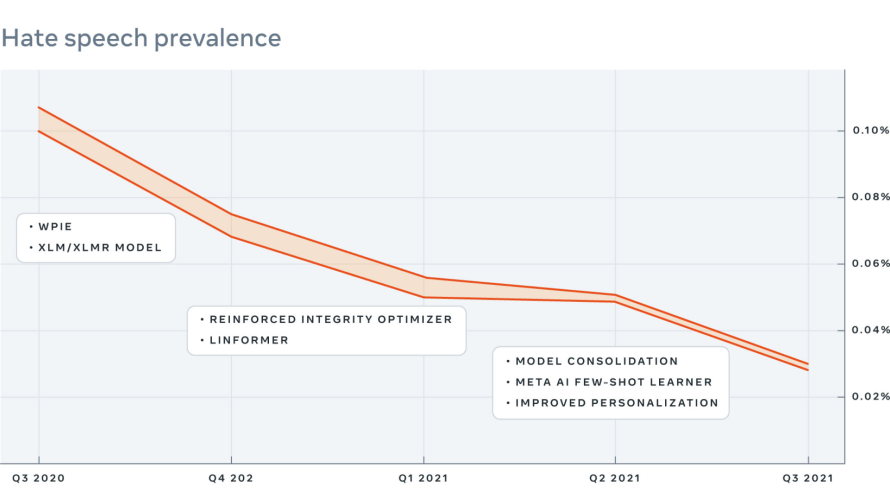

We’ve tested FSL on a few tasks tied to recent events. For example, one recent task was to identify content that shares misleading or sensationalized information discouraging COVID-19 vaccinations (such as “Vaccine or DNA changer?”). In a separate task, the new AI system improved an existing classifier that flags content that comes close to inciting violence (for example, “Does that guy need all of his teeth?”). The traditional approach may have missed these types of inflammatory posts, since there aren’t many labeled examples that use “DNA changer” to create vaccine hesitancy or references to teeth to imply violence. We’ve also seen that FSL, in combination with existing classifiers and other efforts to reduce harmful content, including ongoing improvements in our technology and changes we made to reduce problematic content in News Feed, has helped reduce the prevalence of other harmful content, such as hate speech.

We believe that FSL can, over time, enhance the performance of all of our integrity AI systems by letting them leverage a single, shared knowledge base and backbone to deal with many different types of violations. There’s a lot more work to be done, but these early production results are an important milestone that signals a shift toward more intelligent, generalized AI systems.

Learn more about our Meta AI Few-Shot Learner on our AI blog.