- We think focusing on prevalence, the amount of hate speech people actually see on the platform — and how we reduce it using all of our tools — is the most important measure.

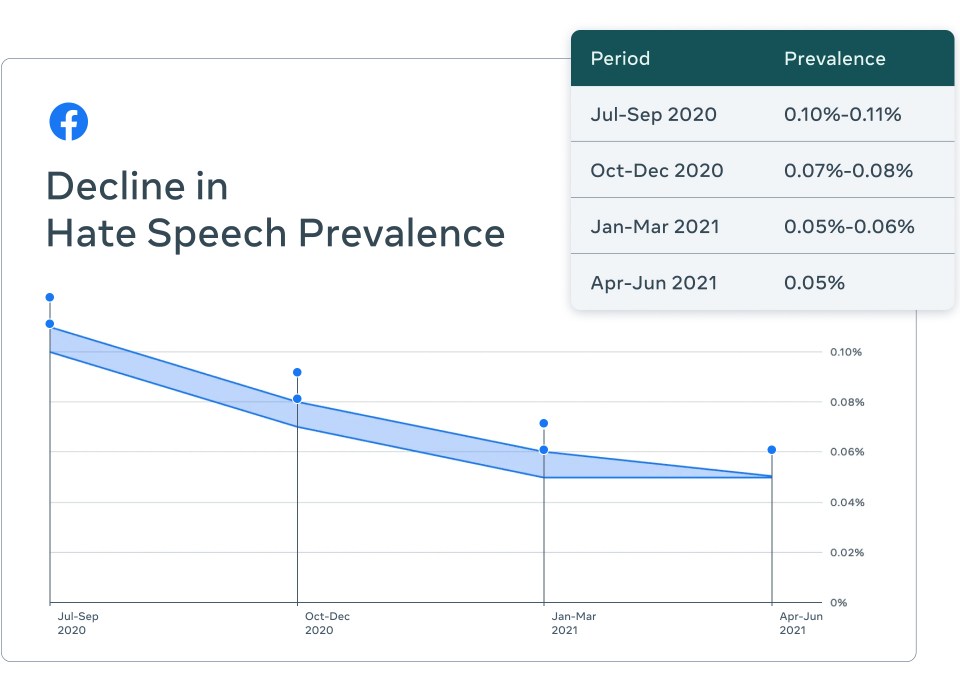

- Our technology is having a big impact on reducing how much hate speech people see on Facebook. According to our latest Community Standards Enforcement Report, the prevalence of hate speech is about 0.05% of content viewed, or about 5 views in every 10,000, down by almost 50% in the last three quarters.

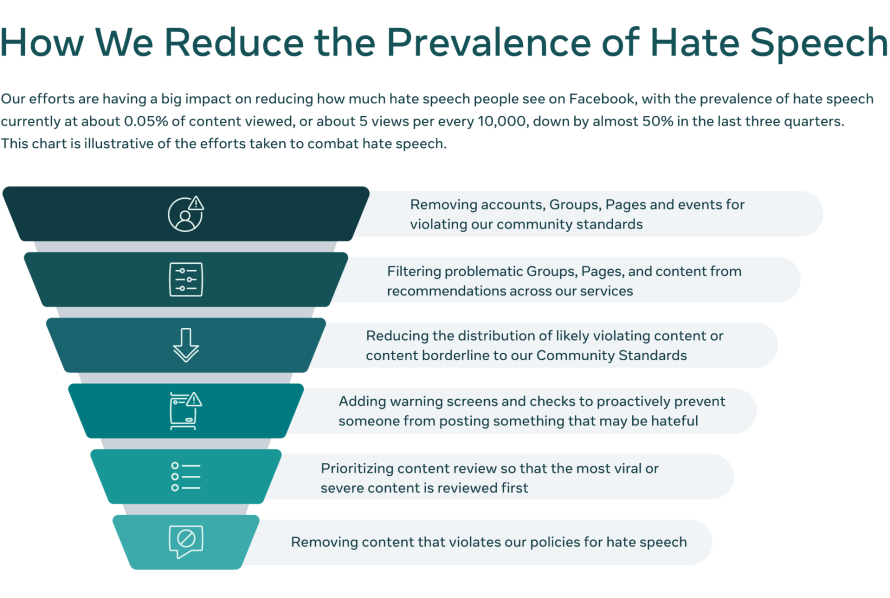

- We use technology to reduce the prevalence of hate speech in several ways: It helps us proactively detect it, route it to our reviewers, and remove it when it violates our policies. It also allows us to reduce the distribution of likely violating content. It is all of these tools working together that makes an impact on prevalence.

- In 2016, our content moderation efforts relied mainly on user reports. So we built technology to proactively identify violating content before people report it. Our proactive detection rate reflects this. However, we report prevalence figures so we can be judged on how much hate speech is actually seen on our apps.

Data pulled from leaked documents is being used to create a narrative that the technology we use to fight hate speech is inadequate and that we deliberately misrepresent our progress. This is not true. We don’t want to see hate on our platform, nor do our users or advertisers, and we are transparent about our work to remove it. What these documents demonstrate is that our integrity work is a multi-year journey. While we will never be perfect, our teams continually work to develop our systems, identify issues and build solutions.

Recent reporting suggests that our approach to addressing hate speech is much narrower than it actually is, ignoring the fact that hate speech prevalence has dropped to 0.05% of content viewed, or 5 views in every 10,000 on Facebook. We believe prevalence is the most important metric to use because it shows how much hate speech is actually seen on Facebook.
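To make the arithmetic behind that figure concrete, here is a minimal sketch of prevalence as a share of content views. The function names and the view counts are illustrative assumptions, and the sketch says nothing about how views are actually sampled or counted.

```python
def prevalence(violating_views: int, total_views: int) -> float:
    """Fraction of all content views that were views of violating content."""
    if total_views <= 0:
        raise ValueError("total_views must be positive")
    return violating_views / total_views


def views_per_10k(rate: float) -> float:
    """Express a prevalence rate as views per 10,000 content views."""
    return rate * 10_000


# Illustrative counts only: 5 violating views out of 10,000 total views.
rate = prevalence(violating_views=5, total_views=10_000)
print(f"{rate:.2%}")  # 0.05%
print(round(views_per_10k(rate), 2))
```

Because the denominator is views rather than posts, a violating post that few people see contributes little to prevalence, while one that is widely seen contributes a lot.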

Focusing just on content removals is the wrong way to look at how we fight hate speech. That’s because using technology to remove hate speech is only one way we counter it. We need to be confident that something is hate speech before we remove it. If something might be hate speech but we’re not confident enough that it meets the bar for removal, our technology may reduce the content’s distribution or avoid recommending Groups, Pages or people that regularly post content that is likely to violate our policies. We also use technology to flag content for more review.

We have a high threshold for automatically removing content. If we didn’t, we’d risk making more mistakes on content that looks like hate speech but isn’t, harming the very people we’re trying to protect, such as those describing experiences with hate speech or condemning it.
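The idea of a high bar for removal and a lower bar for reduced distribution can be pictured as a simple confidence-banded decision. This is only a sketch under stated assumptions: the score, the two threshold values and the names `Action` and `choose_action` are hypothetical, and routing to human review is left out for brevity.

```python
from enum import Enum


class Action(Enum):
    REMOVE = "remove"
    REDUCE_DISTRIBUTION = "reduce_distribution"
    NO_ACTION = "no_action"


# Hypothetical thresholds, chosen only to illustrate the two bands.
REMOVE_THRESHOLD = 0.95
REDUCE_THRESHOLD = 0.60


def choose_action(score: float) -> Action:
    """Map a classifier confidence score in [0, 1] to an enforcement action."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= REMOVE_THRESHOLD:
        return Action.REMOVE  # confident enough to meet the bar for removal
    if score >= REDUCE_THRESHOLD:
        return Action.REDUCE_DISTRIBUTION  # likely violating, not removed
    return Action.NO_ACTION


for s in (0.99, 0.70, 0.10):
    print(s, choose_action(s).value)
```

Raising the hypothetical `REMOVE_THRESHOLD` trades fewer mistaken removals for more content handled by the softer action, which is the trade-off described above.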

Another metric being misconstrued is our proactive detection rate, which tells us how good our technology is at finding content before people report it to us. It tells us, of the content we remove, how much we found ourselves. In 2016, the vast majority of our content removals were based on what users reported to us. We knew we needed to do better and so we began building technology to identify potentially violating content without anyone flagging it to us.

When we began reporting our metrics on hate speech, only 23.6% of content we removed was detected proactively by our systems; the majority of what we removed was found by people. Now, that number is over 97%. But our proactive rate doesn’t tell us what we are missing and doesn’t account for the sum of our efforts, including what we do to reduce the distribution of problematic content. That’s why we focus on prevalence and consistently describe it as the most important metric. Prevalence tells us what violating content people see because we missed it. It’s how we most objectively evaluate our progress, as it provides the most complete picture. We talk about prevalence in our Community Standards Enforcement Report every quarter and describe it in our Transparency Center.
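A short sketch shows why the proactive rate cannot reveal what was missed: it is computed only from content that was removed. The function name and the counts are illustrative assumptions, picked so the result matches the 23.6% figure.

```python
def proactive_rate(proactive_removals: int, total_removals: int) -> float:
    """Of the content removed, the share found before any user report."""
    if total_removals <= 0:
        raise ValueError("total_removals must be positive")
    return proactive_removals / total_removals


# Illustrative counts only: 236 of 1,000 removals found proactively.
early = proactive_rate(proactive_removals=236, total_removals=1_000)
print(f"{early:.1%}")  # 23.6%

# Content that was never detected appears in neither argument, so the
# rate stays the same no matter how much violating content was missed.
```

Prevalence, by contrast, is measured against everything people viewed, so missed content is counted.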

Prevalence is how we measure our work internally, and that’s why we share the same metric externally. While we know our work will never be done in this space, the fact that prevalence has been reduced by almost 50% in the last three quarters shows that taken together, our efforts are having an impact. As reported in our Community Standards Enforcement Report, we can attribute a significant portion of the drop to our improved and expanded AI systems.

We’ve worked with international experts to develop our metrics. We’re also the only company that has volunteered to have them independently audited.

We include many metrics in our quarterly reports, which are the most comprehensive of their kind, to give people a more complete picture. We have worked with international experts in measurement, statistics and other areas to provide an independent, public assessment to make sure we are measuring the right things. While they broadly agreed with our approach, they also provided recommendations for how we can improve. You can read their full report here. We have also committed to undergoing an independent audit, with global auditing firm EY, to make sure we are measuring and reporting our metrics accurately.