Update on August 11, 2021 at 8:45AM PT:

Today, we announced new features to help protect people from abuse on Instagram. These include:

- Limits: We’re introducing a feature that will automatically hide comments and DM requests from people who don’t follow you, or who only recently followed you, to limit unwanted contact.

- Stronger warnings to discourage harassment: We already show a warning when someone tries to post a potentially offensive comment. And if they try to post potentially offensive comments multiple times, we show an even stronger warning that we may remove or hide their comment if they proceed. Now, rather than waiting for the second or third comment, we’ll show this stronger warning the first time.

- Combating abuse in DMs and comments: Hidden Words, which lets you automatically filter messages containing offensive words, phrases and emojis into a Hidden Folder, will be available for everyone globally by the end of this month.


Update on July 22, 2021 at 6:10AM PT:

Racism, abuse and harassment have no place on our apps. Recently, Black footballers at the UEFA European Championship faced racist abuse and harassment on Instagram. It’s our responsibility to protect athletes, and everyone, from abuse on our apps. That means moving faster to remove violating content and equipping people with tools to keep themselves safe. (Updated on July 23, 2021 at 6:25AM PT to clarify our approach to removing violating content.)

So, ahead of the Olympic Games, we’re sharing some updates on how we’re working to improve. These efforts build on the products and policy updates we outlined in our initial post below. They address the racism that athletes may face as well as other forms of hate, including sexism, homophobia and more. We’ll continue to evolve our approach and will share more updates soon.

Preventing Racist Abuse

A big part of how we prevent people from seeing abuse is identifying offensive content using technology. Since context is often crucial to spotting abusive language, we’re constantly training and improving our technology to catch more of it, sooner. Here are two updates:

- Automatically Filtering Comments: We use artificial intelligence to automatically filter out potentially offensive or bullying comments from people you don’t follow. We’ve now expanded the list of potentially offensive words, hashtags and emojis that we automatically filter out of comments, and we will continue to update it frequently.

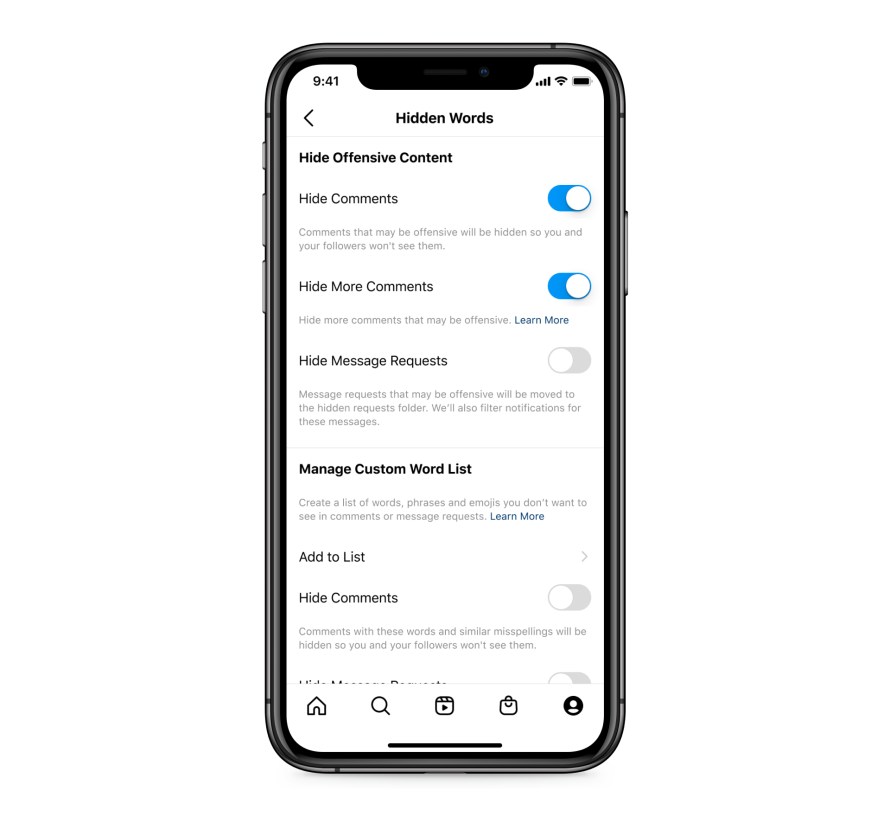

- Additional Filtering Tools: We’re starting to give people the ability to turn on “Hide More Comments” within their privacy settings on Instagram, so they can hide even more comments that may be harmful, even if those comments don’t break our rules.

Helping Athletes Use Our Safety Tools

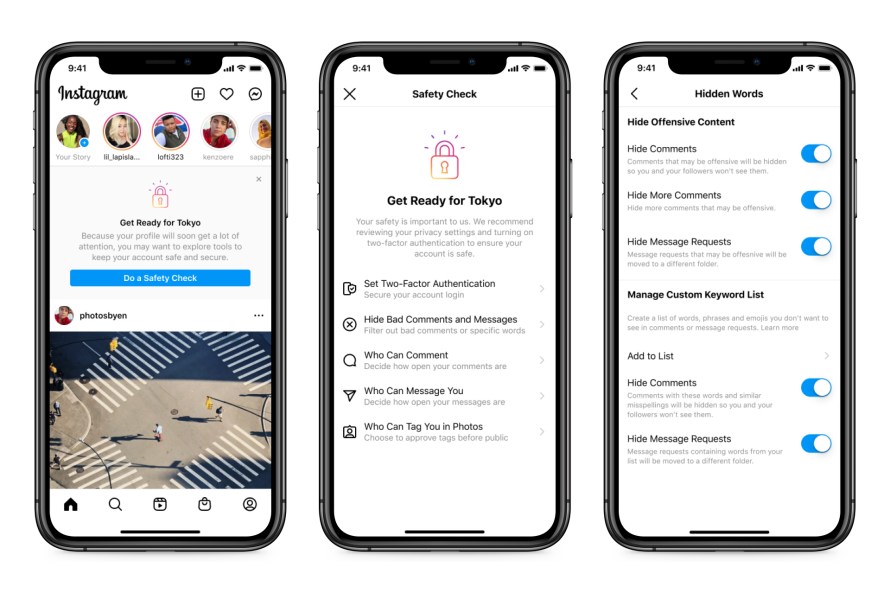

We’ve been rolling out new Instagram notifications at the top of athletes’ feeds to help them set up safety features like Hidden Words. We’ll continue to show this notification to Olympians and Paralympians throughout the summer.

Originally published on May 26, 2021 at 3:00AM PT:

Athletes will take center stage this summer during the UEFA European Championship, and the Olympic and Paralympic Games. Millions of people across the world will use our platforms to celebrate the athletes, countries and moments that make these events so iconic.

However, platforms like Facebook and Instagram mirror society. Everything that is good, bad and ugly in our world will find expression on our apps. So while we expect to see tons of positive engagement, we also know there might be those who misuse our platforms to abuse, harass and attack athletes competing this summer.

We don’t want this behavior on Facebook and Instagram, which is why we’ve recently introduced new measures to protect athletes. Here’s how we’re helping them keep their focus on goals and glory, not hateful DMs and comments.

Our Policies on Hate Speech, Abuse and Harassment

Hate speech is not allowed on our apps. Specifically, we don’t tolerate attacks on people based on their protected characteristics, such as race or religion, and this includes more implicit forms of hate speech. When we become aware of hate speech, we remove it. We’re investing heavily in people and technology to find and remove this content faster. We have tripled the number of people working on safety and security at Facebook, to more than 35,000, and we’re a pioneer in artificial intelligence technology that removes hateful content at scale. Between January and March of this year, we took action on 25.2 million pieces of hate speech on Facebook, including in DMs, 96% of which we found before anyone reported it. On Instagram, during the same period, we took action on 6.3 million pieces of hate speech content, 93% of it before anyone reported it.

We’ve also introduced stricter penalties for people who send abusive DMs on Instagram. When someone sends DMs that break our rules, we prohibit that person from sending any more messages for a set period of time. If someone continues to send violating messages, we’ll disable their account. We’ll also disable new accounts created to get around our messaging restrictions, and will continue to disable accounts we find that are created purely to send abusive messages.

Tools to Protect Athletes

We have also introduced tools to help athletes protect themselves from abuse. These include:

Dedicated Instagram Account for Athletes

We’re launching a dedicated Instagram account for athletes competing in Tokyo this summer. This private account will not only share Facebook and Instagram best practices, but also serve as a channel for athletes to send questions and flag issues to our team.

Instagram Direct Message Controls

Because DMs are private conversations, we don’t proactively look for content like hate speech or bullying the same way we do elsewhere on Instagram. That’s why last year we rolled out reachability controls, which let people limit who can send them a DM, for example to only the people who follow them.

For those who still want to interact with fans but don’t want to see abuse, we’ve announced a new feature called “Hidden Words.” When turned on, this tool will automatically filter DM requests containing offensive words, phrases and emojis, so athletes never have to see them. We’ve worked with leading anti-discrimination and anti-bullying organizations to develop a predefined list of offensive terms that will be filtered from DM requests when the feature is turned on. We know different words can be hurtful to different people, so athletes will also have the option to create their own custom list of words, phrases or emojis that they don’t want to see in their DM requests. All DM requests that contain these words, phrases or emojis, whether from the predefined list or a custom one, will be automatically filtered into a separate hidden requests folder.

Instagram and Facebook Comment Controls

Instagram and Facebook “Comment Controls” let athletes control who can comment on their posts. Athletes also have options in their privacy settings to automatically hide offensive comments on Facebook and Instagram. With this setting turned on, we use technology to proactively filter out words and phrases that are commonly reported for harassment. Athletes can also add their own list of words, phrases or emojis, so they never have to see them in comments again.

Blocking

Athletes can block people on both Instagram and Facebook from seeing their posts or sending them messages. We’re also making it harder for someone already blocked on Instagram to contact them again through a new account. With this feature, whenever athletes decide to block someone on Instagram, they’ll have the option to both block that person’s account and preemptively block new accounts that person may create.

Reminding Athletes of Our Safety Tools

This summer, we’ll remind athletes competing in these events of these tools via an Instagram notification that appears at the top of their Feed. This notification will make it easier for athletes to learn about and set up a number of our safety features, like two-factor authentication and comment and messaging controls.

We’re committed to doing everything we can to fight hate and discrimination on our platforms, but we also know these problems are bigger than us. We look forward to working with other companies, sports organizations, NGOs, governments and educators to help keep athletes safe.