Update on October 20, 2021 at 9:00AM PT:

Today, we’re announcing new measures to keep Facebook Groups safe.

To continue limiting the reach of people who break our rules, we’ll start demoting all Groups content from members who have broken our Community Standards anywhere on Facebook. These demotions will get more severe as those members accrue more violations.

This measure will help reduce the ability of members who break our rules to reach others in their communities, and it builds on the existing restrictions placed on members who violate our Community Standards. These current penalties include restricting their ability to post, comment, add new members to a group or create new groups.

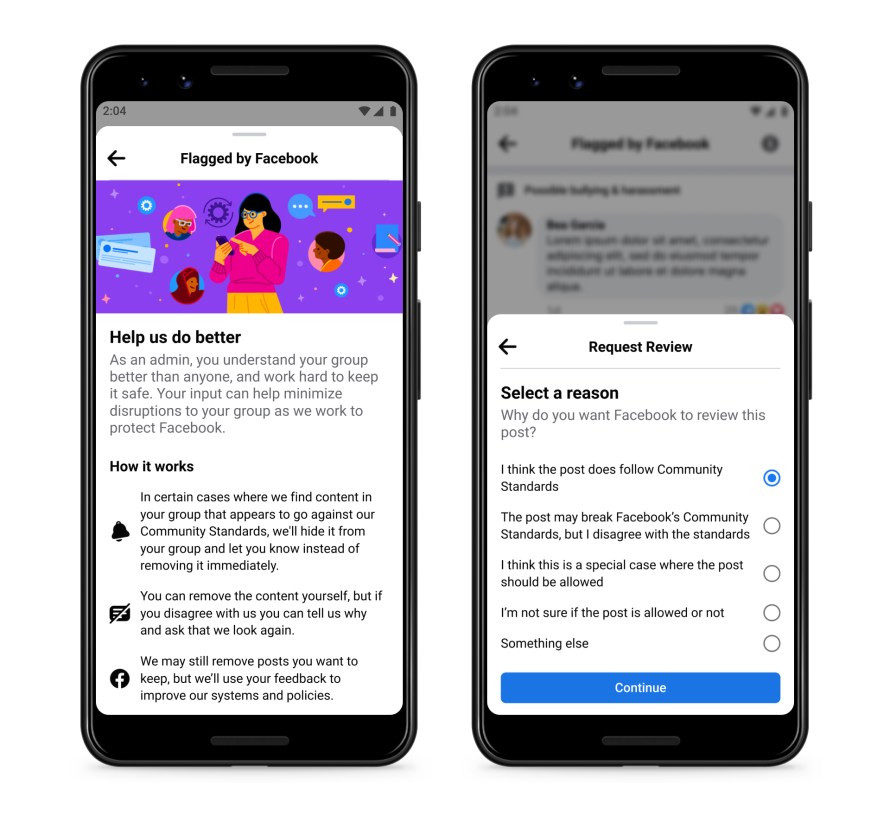

We also want to make sure that our enforcement is transparent and fair. That’s why we’re announcing Flagged by Facebook. This feature shows admins content that has been flagged for removal before it is shown to the broader community.

Admins can then either review and remove the content themselves, or ask for a review by Facebook and provide additional feedback on why they think that piece of content should remain on the platform. Flagged by Facebook involves admins in content review earlier in the process, before members receive a strike and content is removed.

Flagged by Facebook is in addition to the existing ability for admins to appeal when something is taken down for violating our Community Standards. This provides more fairness for communities and helps ensure that the right call is being made when it comes to enforcing our policies.

Originally published on March 17, 2021 at 8:00AM PT:

It’s important to us that people can discover and engage safely with Facebook groups so that they can connect with others around shared interests and life experiences. That’s why we’ve taken action to curb the spread of harmful content, like hate speech and misinformation, and made it harder for certain groups to operate or be discovered, whether they’re Public or Private. When a group repeatedly breaks our rules, we take it down entirely.

Today we’re sharing the latest in our ongoing work to keep Groups safe, which includes our thinking on how to keep recommendations safe and how we’re reducing privileges for those who break our rules. These changes will roll out globally over the coming months.

Improving Group Recommendations

We know we have a greater responsibility when we are amplifying or recommending content. As we work to make sure that potentially harmful groups aren’t recommended to people, we try to be careful not to penalize high-quality groups on similar topics. The tension we navigate isn’t between our business interests and removing low-quality groups. It’s about taking action on potentially harmful groups while still ensuring that community leaders can grow groups that follow the rules and bring people value. We try to balance this carefully in our recommendations guidelines, which can be found here.

As behaviors evolve on our platform, though, we recognize we need to do more. This is why we recently removed civic and political groups, as well as newly created groups, from recommendations in the US.

People can still invite friends to these groups or search for them. We have now started to expand these restrictions globally. This builds on earlier restrictions we’ve made to recommendations, like removing health groups from these surfaces, as well as groups that repeatedly share misinformation.

We are also adding more nuance to our enforcement. When a group starts to violate our rules, we will now start showing it lower in recommendations, which means it’s less likely that people will discover it. This is similar to our approach in News Feed, where we show lower-quality posts further down, so fewer people see them.

Restricting the Reach of Rule-Breaking Groups and Members

We believe that groups and members that violate our rules should have reduced privileges and reach, with restrictions getting more severe as they accrue more violations, until we remove them completely. And when necessary in cases of severe harm, we will outright remove groups and people without these steps in between.

Groups

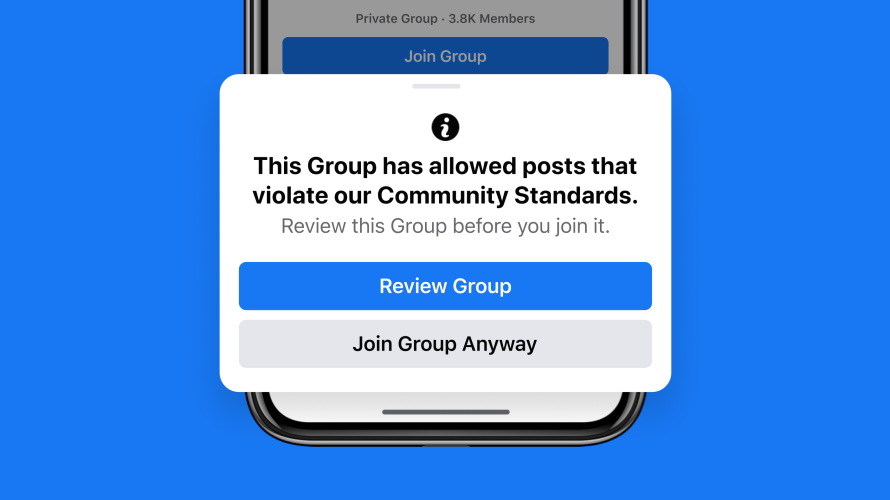

We’ll start to let people know when they’re about to join a group that has Community Standards violations, so they can make a more informed decision before joining. We’ll limit invite notifications for these groups, so people are less likely to join. For existing members, we’ll reduce the distribution of that group’s content so that it’s shown lower in News Feed. We think these measures as a whole, along with demoting groups in recommendations, will make it harder to discover and engage with groups that break our rules.

We will also start temporarily requiring admins and moderators to approve all posts when a group has a substantial number of members who have violated our policies or were part of other groups that were removed for breaking our rules. This means that content won’t be shown to the wider group until an admin or moderator reviews and approves it. If an admin or moderator repeatedly approves content that breaks our rules, we’ll take the entire group down.

Members

When someone has repeated violations in groups, we will block them from posting or commenting in any group for a period of time. They also won’t be able to invite others to any groups or create new groups. These measures are intended to help limit the reach of those looking to use our platform for harmful purposes, and they build on existing restrictions we’ve put in place over the last year.

There is always more to do to keep Facebook Groups safe, and we will continue to build and invest to make sure people can rely on these places for connection and support.