AI gets better every day. Here’s what that means for stopping hate speech.

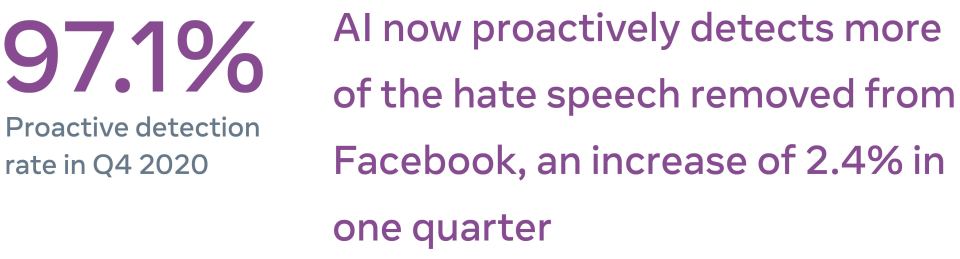

The numbers in our latest Community Standards Enforcement Report, released today, show the many ways technology is delivering the kind of progress our world demands. In the final three months of 2020, we were better than ever at proactively detecting hate speech and bullying and harassment content: 97% of the hate speech we took down from Facebook was spotted by our automated systems before any human flagged it, up from 94% in the previous quarter and 80.5% in late 2019.

More importantly, this is up from 24% in late 2017, a pace of improvement we rarely see in technologies deployed at such a scale. When new technologies have helped address the hardest problems facing our world, from curing diseases to producing safer cars, progress has typically come incrementally over decades as those technologies were refined and improved.
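The proactive rate quoted here is a simple ratio: the content our automated systems flagged before any human reported it, divided by all the content we took action on in the period. The sketch below assumes exactly that definition; the function names are hypothetical and are not part of any Facebook tool.

```python
def proactive_rate(flagged_before_report: int, total_actioned: int) -> float:
    """Fraction of actioned content that automated systems flagged
    before any user reported it (assumed definition)."""
    if total_actioned <= 0:
        raise ValueError("total_actioned must be positive")
    if not 0 <= flagged_before_report <= total_actioned:
        raise ValueError("flagged_before_report must be between 0 and total_actioned")
    return flagged_before_report / total_actioned


def implied_proactive_count(rate: float, total_actioned: int) -> float:
    """Invert the ratio: estimate how many items were flagged proactively
    from a published rate and a published total."""
    return rate * total_actioned


# Hate speech proactive rates quoted in this post, as fractions.
rate_late_2017 = 0.24
rate_q4_2020 = 0.97

# Ratio of the two rates: roughly a fourfold improvement.
print(round(rate_q4_2020 / rate_late_2017, 2))
```

Applied to the 26.9 million pieces of hate speech taken down in the quarter, a figure given later in this post, a 97% rate implies that roughly 26.1 million were flagged proactively; because the published rate is rounded, that count is only an estimate.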

I hear the same story of steady, continuous improvements these days when I talk to the engineers building AI systems that can prevent hate speech and other unwanted content from spreading across the internet.

What’s so encouraging is that the field of AI as a whole is advancing month by month, producing dramatic gains every year.

Beneath those top-line numbers are stories of steady progress, driven by AI technology that keeps delivering better results even as the challenges evolve and people try to evade detection by our systems.

One example is the way our systems now detect violating content in the comments on posts. This has historically been a challenge for AI, because whether a comment violates our policies often depends on the context of the post it is replying to. “This is great news” means something entirely different beneath a post announcing the birth of a child than beneath one announcing the death of a loved one.

Throughout 2020, our engineers worked to improve the way our AI systems analyze comments, considering both the comments themselves and their context. This required a deeper understanding of language, as well as the ability to combine analyses of the images, text, and other details contained in a post.
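To make the idea of scoring a comment in context more concrete, here is a minimal late-fusion sketch. It assumes the comment, the post's text, and the post's image have each already been turned into a feature vector by some upstream model; the function, the weights, and the toy embeddings are all hypothetical and do not describe Facebook's production system.

```python
import numpy as np


def sigmoid(z: float) -> float:
    """Map a raw score to a probability-like value in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def score_comment(
    comment_vec: np.ndarray,
    post_text_vec: np.ndarray,
    post_image_vec: np.ndarray,
    weights: np.ndarray,
    bias: float = 0.0,
) -> float:
    """Hypothetical late-fusion scorer.

    Each input vector is assumed to come from a separate upstream encoder
    (comment text, post text, post image). Concatenating them lets a single
    linear layer weigh the comment together with the post it replies to.
    """
    features = np.concatenate([comment_vec, post_text_vec, post_image_vec])
    if features.shape != weights.shape:
        raise ValueError("weights must match the concatenated feature length")
    return float(sigmoid(features @ weights + bias))


# Toy 2-dimensional embeddings, invented purely for illustration.
comment = np.array([0.9, 0.1])     # "This is great news"
birth_post = np.array([0.8, 0.0])  # post announcing a birth
loss_post = np.array([0.0, 0.9])   # post announcing a death
no_image = np.zeros(2)             # neither post has an image

# Invented weights: 2 for the comment, 2 for post text, 2 for post image.
weights = np.array([0.5, 0.5, -1.0, 2.0, 0.0, 0.0])

print(score_comment(comment, birth_post, no_image, weights))
print(score_comment(comment, loss_post, no_image, weights))
```

With these invented weights, the same comment scores higher beneath the post about a death than beneath the post about a birth, which is the kind of behavior a context-aware classifier needs. A real system would learn its weights from labeled data and would typically use richer, non-linear fusion.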

Like so much of the most important technological progress, this work wasn’t revolutionary but evolutionary. Our teams brought together better training data, better features, and better AI models to produce a system that is better at analyzing comments and continuously learns from new data. The results are apparent in the numbers released today: in the first three months of 2020, our systems detected just 16% of the bullying and harassment content we took action on before anyone reported it. By the end of the year, that share had risen to almost 49%, meaning millions of additional pieces of violating content were detected and removed before anyone reported them. We expect more improvements as this field of AI technology continues to advance.

Another area of progress is how our systems now operate across multiple languages. Thanks largely to improvements in how our AI tools detect violating content in widely spoken languages such as Spanish and Arabic, the amount of hate speech we took down reached 26.9 million pieces of content, up from 22.1 million in the previous quarter.

The improvement in these languages came about because a whole package of AI technologies made leaps forward in the past year. We’ve written before about Linformer, a new architecture that allows us to train AI models on longer and more complex pieces of text, and about RIO, a new system that allows our content moderation tools to continuously learn and improve from the new content posted to Facebook each day.
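Linformer's core idea, as described in its published research, is to project the keys and values along the sequence dimension from length n down to a fixed length k before computing self-attention, so the attention matrix is n × k instead of n × n. Here is a minimal single-head NumPy sketch, assuming randomly initialized projection matrices E and F (in the actual model these are learned parameters):

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def standard_attention(Q, K, V):
    """Scaled dot-product attention: builds an (n, n) score matrix."""
    d = Q.shape[-1]
    return softmax(Q @ K.T / np.sqrt(d)) @ V


def linformer_attention(Q, K, V, E, F):
    """Single-head Linformer-style attention.

    Q, K, V: (n, d) query, key, and value matrices.
    E, F:    (k, n) projections that compress the sequence axis from n to k.
    """
    d = Q.shape[-1]
    K_proj = E @ K                      # (k, d)
    V_proj = F @ V                      # (k, d)
    scores = Q @ K_proj.T / np.sqrt(d)  # (n, k) instead of (n, n)
    return softmax(scores) @ V_proj     # (n, d)


rng = np.random.default_rng(0)
n, d, k = 1024, 64, 128
Q, K, V = (rng.standard_normal((n, d)) for _ in range(3))
E, F = (rng.standard_normal((k, n)) / np.sqrt(n) for _ in range(2))

out = linformer_attention(Q, K, V, E, F)
print(out.shape)  # (1024, 64)
```

Because the score matrix has n × k entries rather than n × n, the memory and compute for this step grow linearly with sequence length for a fixed k, which is what makes longer inputs practical. Setting k = n and E, F to the identity reduces the function to standard attention, a handy sanity check.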

What makes me particularly proud is not just that these cutting-edge technologies are making our platforms better and safer — it’s that we’ve published the research behind them and released the code, enabling academic researchers and engineers across the industry to work with what we’ve built.

There is still so much to be done, despite these encouraging improvements. One particular area of focus is getting AI even better at understanding content in context across languages, cultures, and geographies. The same words can often be interpreted as either benign or hateful, depending on where they’re published and who is reading them, and training machines to capture this nuance is especially challenging.

But as with so many other challenges, we’re seeing continuous gains, and the steady enhancement of our AI capabilities shows no sign of slowing down. While 2020 was a year of ongoing improvement in the performance of our systems, it was also a year in which our research scientists made fundamental breakthroughs that will move from the lab into our core systems faster than ever. I’m very confident that in the coming year, entirely new technologies will emerge to work alongside those that drove so much progress in 2020.