By Guy Rosen, VP of Product Management

We’re often asked how we decide what’s allowed on Facebook — and how much bad stuff is out there. For years, we’ve had Community Standards that explain what stays up and what comes down. Three weeks ago, for the first time, we published the internal guidelines we use to enforce those standards. And today we’re releasing numbers in a Community Standards Enforcement Report so that you can judge our performance for yourself.

Alex Schultz, our Vice President of Data Analytics, explains in more detail how exactly we measure what’s happening on Facebook in both this Hard Questions post and our guide to Understanding the Community Standards Enforcement Report. But it’s important to stress that this is very much a work in progress and we will likely change our methodology as we learn more about what’s important and what works.

This report covers our enforcement efforts from October 2017 to March 2018 across six areas: graphic violence, adult nudity and sexual activity, terrorist propaganda, hate speech, spam, and fake accounts. The numbers show you:

- How much content people saw that violates our standards;

- How much content we removed; and

- How much content we detected proactively using our technology — before people who use Facebook reported it.

Most of the action we take to remove bad content is around spam and the fake accounts used to distribute it. For example:

- We took down 837 million pieces of spam in Q1 2018, nearly 100% of which we found and flagged before anyone reported it.

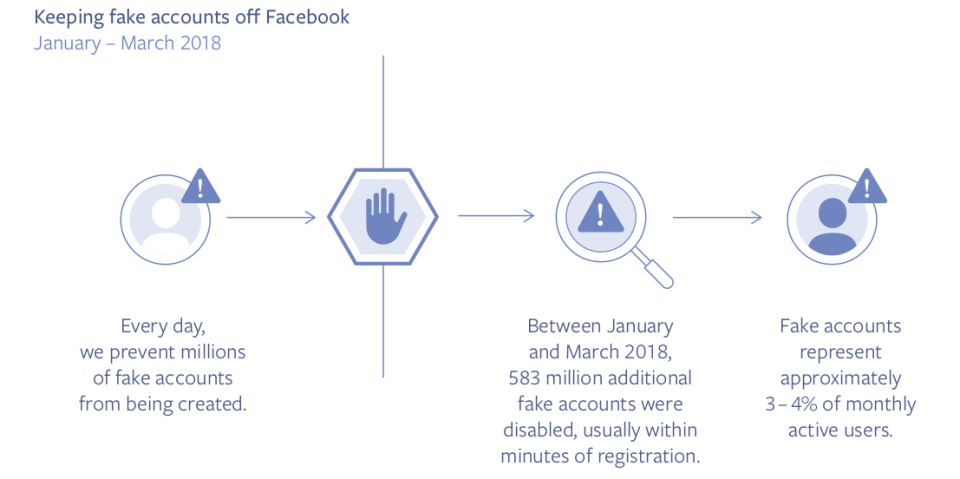

- The key to fighting spam is taking down the fake accounts that spread it. In Q1, we disabled about 583 million fake accounts — most of which were disabled within minutes of registration. This is in addition to the millions of fake account attempts we prevent daily from ever registering with Facebook. Overall, we estimate that around 3 to 4% of the active Facebook accounts on the site during this time period were still fake.

In terms of other types of violating content:

- We took down 21 million pieces of adult nudity and sexual activity in Q1 2018, 96% of which was found and flagged by our technology before it was reported. Overall, we estimate that out of every 10,000 content views on Facebook, 7 to 9 were of content that violated our adult nudity and sexual activity standards.

- For graphic violence, we took down or applied warning labels to about 3.5 million pieces of violent content in Q1 2018 — 86% of which was identified by our technology before it was reported to Facebook.

- For hate speech, our technology still doesn’t work that well, so the content it flags needs to be checked by our review teams. We removed 2.5 million pieces of hate speech in Q1 2018, 38% of which was flagged by our technology.
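
To make the percentages above concrete, here is a minimal sketch of the arithmetic behind them. It uses only the rounded figures quoted in this post; the function names are illustrative, and this is not the methodology used to produce the report itself.

```python
# Rounded Q1 2018 figures quoted in this post:
# area -> (pieces acted on, share flagged by technology before any report).
ACTIONED = {
    "adult nudity and sexual activity": (21_000_000, 0.96),
    "graphic violence": (3_500_000, 0.86),
    "hate speech": (2_500_000, 0.38),
}


def proactive_count(total, proactive_share):
    """Approximate number of pieces flagged by technology before any report."""
    return round(total * proactive_share)


def per_10k_to_percent(views_per_10k):
    """Convert 'N views out of every 10,000' into a percentage of views."""
    return views_per_10k / 10_000 * 100


for area, (total, share) in ACTIONED.items():
    flagged = proactive_count(total, share)
    print(f"{area}: ~{flagged:,} flagged proactively, ~{total - flagged:,} not")

# The 7 to 9 views per 10,000 estimate, expressed as a percentage range.
low, high = per_10k_to_percent(7), per_10k_to_percent(9)
print(f"adult nudity prevalence: roughly {low:.2f}% to {high:.2f}% of views")
```

Because the inputs are rounded ("about 3.5 million", "96%"), the resulting counts are approximations rather than exact totals.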

As Mark Zuckerberg said at F8, we have a lot of work still to do to prevent abuse. It’s partly that technology like artificial intelligence, while promising, is still years away from being effective for most bad content because context is so important. For example, artificial intelligence isn’t good enough yet to determine whether someone is pushing hate or describing something that happened to them so they can raise awareness of the issue. And more generally, as I explained two weeks ago, technology needs large amounts of training data to recognize meaningful patterns of behavior, which we often lack in less widely used languages or for cases that are not often reported. In addition, in many areas — whether it’s spam, porn or fake accounts — we’re up against sophisticated adversaries who continually change tactics to circumvent our controls, which means we must continuously build and adapt our efforts. It’s why we’re investing heavily in more people and better technology to make Facebook safer for everyone.

It’s also why we are publishing this information. We believe that increased transparency tends to lead to increased accountability and responsibility over time, and publishing this information will push us to improve more quickly too. This is the same data we use to measure our progress internally — and you can now see it to judge our progress for yourselves. We look forward to your feedback.