Today we removed three separate networks for violating our policy against coordinated inauthentic behavior (CIB). Two of these networks targeted the United States, among other countries, and one network originated in and targeted domestic audiences in Myanmar.

In each case, the people behind this activity coordinated with one another and used fake accounts as a central part of their operations to mislead people about who they are and what they are doing, and that was the basis for our action. When we investigate and remove these operations, we focus on behavior rather than content, no matter who’s behind them, what they post, or whether they’re foreign or domestic.

Over the past three years, we’ve shared our findings on over 100 networks of coordinated inauthentic behavior we detected and removed from our platforms. Earlier this year, we started publishing monthly CIB reports where we share information about the networks we take down to make it easier for people to see progress we’re making in one place. In some cases, like today, we also share our findings soon after our enforcement. Today’s takedowns will also be included in our October report.

The networks we removed today were caught early in their operation, before they were able to build their audience. As it gets harder to go undetected for long periods of time, we see malicious actors attempt to play on our collective expectation of widespread interference to create the perception that they're more impactful than they in fact are. We call it perception hacking: an attempt to weaponize uncertainty to sow distrust and division.

We saw it ahead of the 2018 midterm elections in the US when Russian actors falsely claimed they had a large presence on social media and control over the outcome of the midterm elections. We saw it again with one of the networks we took action on last week where the Iranian operators emailed people with unsubstantiated claims that they hacked into voting systems in the US — they also tried to spread this claim using an account on Facebook.

In recent weeks, government agencies, technology platforms and security experts have alerted the public to expect attempts to spread false information about the integrity of the election. We’re closely monitoring for potential scenarios where malicious actors around the world may use fictitious claims, including about compromised election infrastructure or inaccurate election outcomes, to suppress voter turnout or erode trust in the poll results, particularly in battleground states.

It’s important that we all stay vigilant, but also see these campaigns for what they are — small and ineffective. Overstating the importance of these campaigns is exactly what these malicious actors want, and we should not take the bait.

Our teams remain on high alert, and we’re working closely with other technology companies, law enforcement and independent researchers to find and remove influence operations. We will continue to share our findings publicly to provide context for the adversarial trends we see.

What We Found

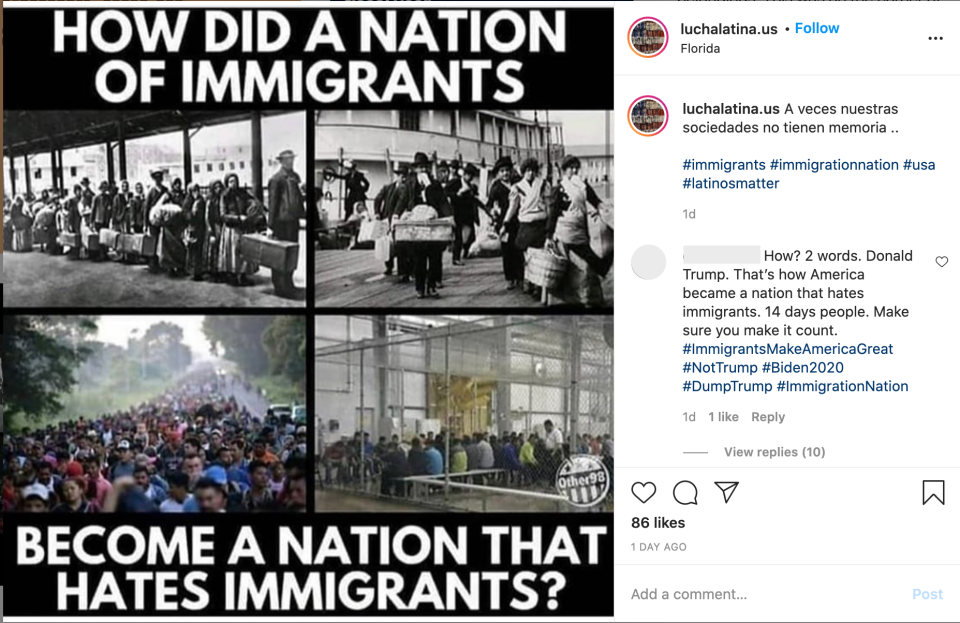

1. We removed 2 Facebook Pages and 22 Instagram accounts for violating our policy against foreign interference, which is coordinated inauthentic behavior on behalf of a foreign entity. This small network was in the early stages of building an audience and was operated by individuals in Mexico and Venezuela, some wittingly and some unwittingly. It primarily targeted the US.

This network began creating accounts in April 2020. The individuals behind this activity took steps to conceal their coordination and who was behind this network. Some of the people managing these accounts and Pages claimed to work for a Poland-linked firm, Social CMS, which doesn't appear to exist. They used fake accounts to create fictitious personas and post content. Some of these accounts posed as Americans supporting various social and political causes and tried to contact other people to amplify this operation's content. These accounts followed, liked and occasionally commented on others' posts to increase engagement on their own content.

The people behind this activity posted in Spanish and English about news and current events in the US including memes and other content about humor, race relations and racial injustice, feminism and gender relations, environmental issues and religion. A small portion of this content included memes posted by the Russian Internet Research Agency (IRA) in the past. They reused content shared across internet services by others, including screenshots of social media posts by public figures.

We began this investigation based on information about this network’s off-platform activity from the FBI. Our internal investigation revealed the full scope of this network on Facebook.

- Presence on Facebook and Instagram: 2 Facebook Pages and 22 Instagram accounts.

- Followers: 1 account followed one or more of these Pages and around 54,500 people followed one or more of these Instagram accounts (about 50% of which were in the US). The account with the highest following had under 15,000 followers.

Below is a sample of the content posted by some of these Pages and accounts:

2. We also removed 12 Facebook accounts, 6 Pages and 11 Instagram accounts for government interference, which is coordinated inauthentic behavior on behalf of a government entity. This small network originated in Iran and focused primarily on the US and Israel.

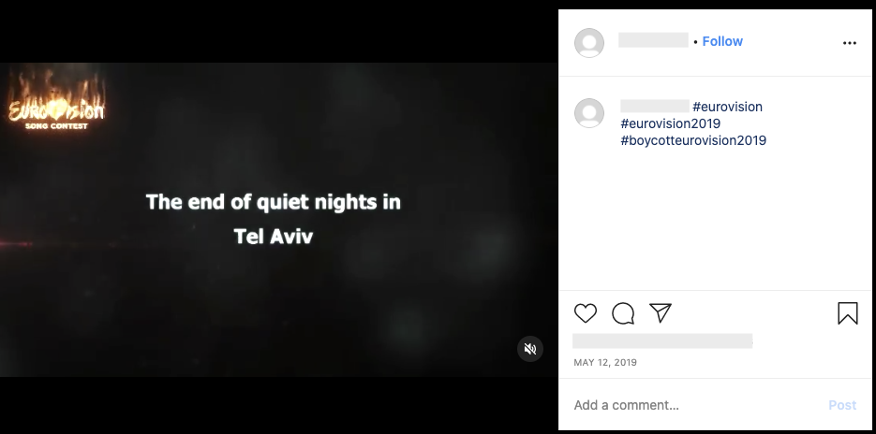

We began this investigation based on information from the FBI about this network's off-platform activity. As a result, last week, we removed a single fake account created in October 2020 that attempted to seed false claims and unsubstantiated election-related threats as part of an influence operation carried out primarily via email. Our teams continued to investigate and found additional dormant accounts and Pages that had been largely inactive since May 2019 and focused primarily on Israel. This operation used fake accounts, some of which had already been detected and removed by our automated systems. Some of these accounts tried to contact others, including an Afghanistan-focused media outlet, to spread their information. They focused on Saudi Arabia's activities in the Middle East and on claims about an alleged massacre at Eurovision, an international song contest hosted by Israel in 2019.

Although the people behind this activity attempted to conceal their identity and coordination, our investigation found limited links to the CIB network we removed in April 2020 and connections to individuals associated with the Iranian government.

- Presence on Facebook and Instagram: 12 Facebook accounts, 6 Pages and 11 Instagram accounts.

- Followers: About 120 accounts followed one or more of these Pages and about 700 people followed one or more of these Instagram accounts.

Below is a sample of the content posted by some of these Pages and accounts:

3. We also removed 10 Facebook accounts, 8 Pages, 2 Groups and 2 Instagram accounts for coordinated inauthentic behavior. This network originated in Myanmar and focused on domestic audiences. (Updated on November 6, 2020 at 8:53AM PT to reflect the latest enforcement numbers.)

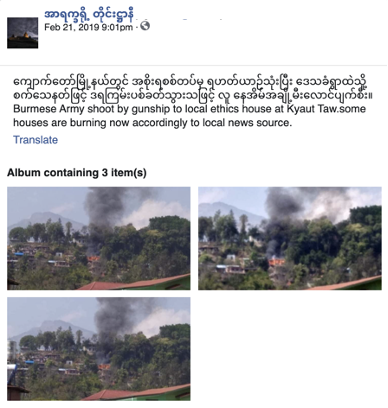

The people behind this activity used fake accounts to create fictitious personas, post content and manage Pages and Groups, some of which had already been detected and disabled by our automated systems for violating our spam, terrorism and hate speech policies. Some of these Pages impersonated others, including an official Tatmadaw Page. This network posted primarily in Burmese about current events in Rakhine State in Myanmar, including posts in support of the Arakan Army and criticism of the Tatmadaw, Myanmar's armed forces. This activity did not appear to be directly focused on the November elections in Myanmar.

We found this network as part of our proactive investigation into suspected coordinated inauthentic behavior ahead of the upcoming election in the region.

- Presence on Facebook and Instagram: 10 Facebook accounts, 8 Pages, 2 Groups and 2 Instagram accounts. (Updated on November 6, 2020 at 8:53AM PT to reflect the latest numbers.)

- Followers: About 16,500 accounts followed one or more of these Pages, around 50 accounts joined one or more of these Groups and around 25 people followed one or more of these Instagram accounts.

Below is a sample of the content posted by some of these Pages and accounts:

Page title: Homeland of Arakans

Caption: Houses in Kyaut Taw Township were destroyed and burnt as a result of the Myanmar military using gunship helicopters and violently shooting at the village.